Learning from Distant, High-level Human Supervision

State-of-the-art neural models have achieved impressive results on a range of NLP tasks but are still quite data hungry to build. Training (or fine tuning) these models towards a specific task/domain may require hundreds of thousands of labeled samples. This puts huge labor burden and time cost on manual data annotation. Going beyond the standard instance-label training design, we are developing next-generation training paradigms for building neural NLP systems. The key ideas are to translate high-level human supervisions into machine-executable, modularized programs for model training, and to reference pre-existing knowledge resources for automatic data annotation. We focus on building new datasets and algorithms for digesting high-level human supervision and making use of distant supervision, in order to accelerate the model construction process and improve label efficiency of current NLP systems.

Learning from Explanations with Neural Module Execution Tree

Ziqi Wang*, Yujia Qin*, Wenxuan Zhou, Jun Yan, Qinyuan Ye, Leonardo Neves, Zhiyuan Liu, Xiang Ren. ICLR 2020.

Project

Github

Learning to Contextually Aggregate Multi-Source Supervision for Sequence Labeling

Ouyu Lan, Xiao Huang, Bill Yuchen Lin, He Jiang, Liyuan Liu, Xiang Ren. ACL 2020

Code

TriggerNER: Learning with Entity Triggers as Explanation for Named Entity Recognition.

Bill Yuchen Lin*, Dong-Ho Lee*, Ming Shen, Xiao Huang, Ryan Moreno, Prashant Shiralkar, and Xiang Ren. ACL, 2020. Github

LEAN-LIFE: A Label-Efficient Annotation Framework Towards Learning from Annotator Explanation

Dong-Ho Lee*, Rahul Khanna*, Bill Yuchen Lin, Seyeon Lee, Qinyuan Ye, Elizabeth Boschee, Leonardo Neves and Xiang Ren. ACL 2020 (demo)

Project

NERO: A Neural Rule Grounding Framework for Label-Efficient Relation Extraction

Wenxuan Zhou, Hongtao Lin, Bill Yuchen Lin, Ziqi Wang, Junyi Du, Leonardo Neves, Xiang Ren. The Web Conference 2020.

Github

AlpacaTag: An Active Learning-based Crowd Annotation Framework for Sequence Tagging

Bill Yuchen Lin*, Dong-Ho Lee*, Frank F. Xu, Ouyu Lan, Xiang Ren. ACL 2019, (System Demo).

Project |

Wiki |

Github |

Poster

Heterogeneous Supervision for Relation Extraction: A Representation Learning Approach

Liyuan Liu*, Xiang Ren*, Qi Zhu, Shi Zhi, Huan Gui, Heng Ji, Jiawei Han. EMNLP 2017.

Github

Project

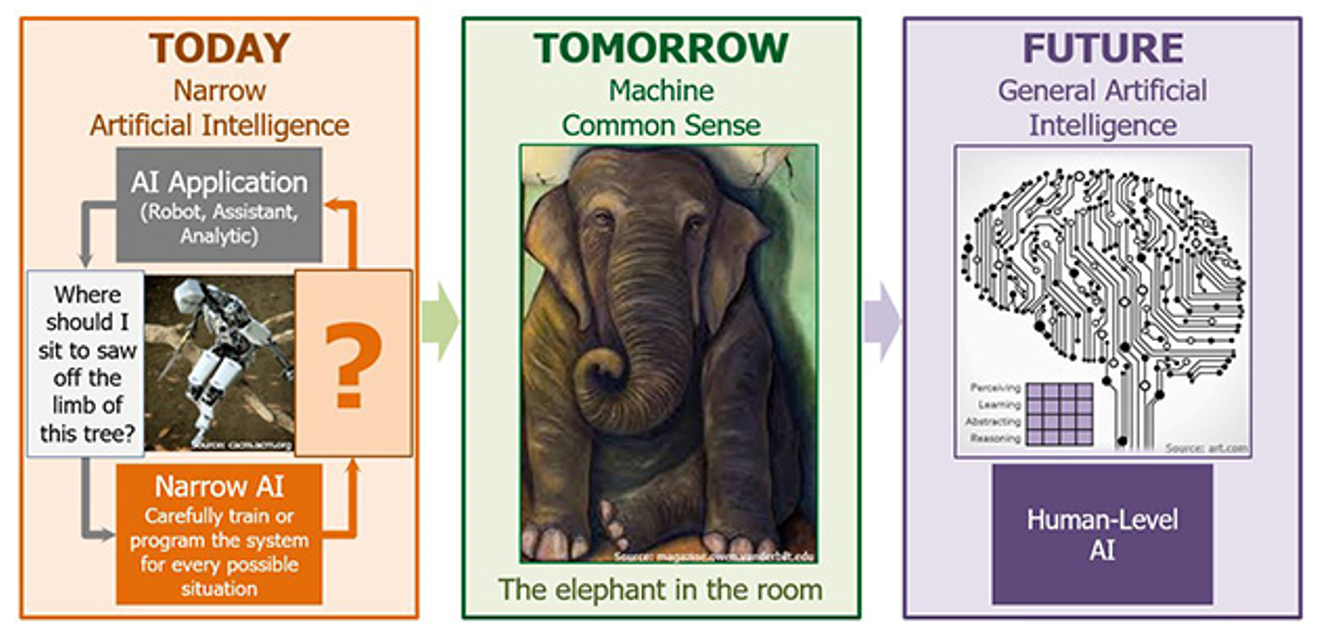

Common Sense Reasoning for Artificial General Intelligence

Humans need commonsense knowledge to make new decisions in everyday situations, while even state-of-the-art AI models can make wrong decisions due to the lack of commonsense reasoning (CSR) ability. To teach machines to think with common sense like humans, we have been developing new reasoning methods and benchmarking datasets for CSR. For multiple-choice reasoning setting, we have focused on knowledge-aware methods that exploit commonsense knowledge graphs with graph neural networks. We have also been studying commonsense reasoning in generative and open-ended setting, which are closer to realistic applications (e.g., dialogue systems, search engines, etc.). Beyond the language modal, we are also studying CSR in multi-modal environments (e.g., language + vision). We hope our research in commonsense reasoning can become fundamental building blocks for future Artificial General Intelligence (AGI) systems.

Pre-training Text-to-Text Transformers for Concept-centric Common Sense Wangchunshu Zhou*, Dong-Ho Lee*, Ravi Kiran Selvam, Seyeon Lee, Bill Yuchen Lin, Xiang Ren ICLR 2021

CommonGen: A Constrained Text Generation Challenge for Generative Commonsense

Reasoning

Bill Yuchen Lin, Ming Shen, Pei Zhou, Chandra Bhagavatula, Yejin Choi, Xiang Ren

EMNLP

2020 (Findings).

Project

Data Huggingface

Leaderboard Code

Media coverage: The Register, Tech Xplore, Techzine, Radio.com, ScienceDaily

Birds have four legs?! NumerSense: Probing Numerical Commonsense Knowledge of Pre-trained Language Models Bill Yuchen Lin, Seyeon Lee, Rahul Khanna, Xiang Ren EMNLP 2020 Project Data Leaderboard Code

Differentiable

Open-Ended Commonsense Reasoning

Bill Yuchen Lin, Haitian Sun, Bhuwan Dhingra, Manzil Zaheer, Xiang Ren, William W. Cohen

NAACL 2021

Scalable Multi-Hop

Relational Reasoning for Knowledge-Aware Question Answering

Yanlin Feng*, Xinyue Chen*, Bill Yuchen Lin, Peifeng Wang, Jun Yan, Xiang

Ren EMNLP

2020

Code

Connecting the Dots: A Knowledgeable

Path Generator for Commonsense Question Answering

Peifeng Wang, Nanyun Peng, Pedro Szekely, Xiang Ren

EMNLP

2020 (Findings)

Project Notebook Tutorial Code Model checkpoints

Learning with Structured Inductive Biases

Deep neural networks have demonstrated strong capability in fitting large dataset in order to master a task, but at the same time also showing poor generalization ability in terms of task/domain transferability. One main reason is because the common mechanisms shared between the tasks (i.e., inductive biases), such as model components and constraints, are not explicitly specified in the model architectures. We are exploring various ways of designing structural inductive biases that are task-general and human-readable, and developing novel model architectures and learning algorithms to impose such inductive biases. This will yield NLP systems that run effectively under low data regime, while demonstrating good task/domain transferability.

Contextualizing Hate Speech Classifiers with Post-hoc Explanation

Brendan Kennedy*, Xisen Jin*, Aida Mostafazadeh Davani, Morteza Dehghani and Xiang Ren. ACL 2020.

Project

Github

KagNet: Knowledge-Aware Graph Networks for Commonsense Reasoning

Bill Yuchen Lin, Xinyue Chen, Jamin Chen, Xiang Ren. EMNLP 2019 (long).

Github

CommonGen: A Constrained Text Generation Dataset Towards Generative Commonsense Reasoning

Bill Yuchen Lin, Ming Shen, Yu Xing, Pei Zhou, Xiang Ren. AKBC 2020.

Leaderboard

Github

Learning from Explanations with Neural Execution Tree

Ziqi Wang*, Yujia Qin*, Wenxuan Zhou, Jun Yan, Qinyuan Ye, Leonardo Neves, Zhiyuan Liu, Xiang Ren. ICLR 2020 (poster).

Towards Hierarchical Importance Attribution: Explaining Compositional Semantics for Neural Sequence Models

Xisen Jin, Zhongyu Wei, Junyi Du, Xiangyang Xue, Xiang Ren. ICLR 2020 (spotlight).

Project |

Github

Knowledge Reasoning over Heterogeneous Data

Rule-based symbolic reasoning systems have the advantage of precise grounding and induction but are short for the fuzzy matching and uncertainty. In contrast, embedding-based reasoning methods are built on data-driven machine learning paradigm and can fit an effective model with large amount of data, while lacking the strength of good generalization. We are working on neural-symbolic reasoning methods to combine fuzzy reasoning with good generalization, and extending the reasoning target from static, graph-structured data to heterogeneous sources such as time-variant graph structures and unstructured text.

Collaborative Policy Learning for Open Knowledge Graph Reasoning

Cong Fu, Tong Chen, Meng Qu, Woojeong Jin, Xiang Ren. EMNLP 2019.

Github

Recurrent Event Network for Reasoning over Temporal Knowledge Graphs

Woojeong Jin, Changlin Zhang, Pedro Szekely, Xiang Ren. ICLR-RLGM 2019.

Github | Survey

KagNet: Knowledge-Aware Graph Networks for Commonsense Reasoning

Bill Yuchen Lin, Xinyue Chen, Jamin Chen, Xiang Ren. EMNLP 2019 (long).

Github

Hierarchical Graph Representation Learning with Differentiable Pooling

Rex Ying, Jiaxuan You, Christopher Morris, Xiang Ren, William L. Hamilton, Jure Leskovec. NeurIPS 2018 (Spotlight).

ArXiv

Github