Overview

Pre-trained language models (PTLMs) have achieved impressive results on a range of natural language understanding (NLU) and generation (NLG) tasks. However, current pre-training objectives such as masked token prediction (for BERT-style PTLMs) and masked span infilling (for T5-style PTLMs) do not explicitly model relational commonsense knowledge about everyday concepts, which is crucial to many downstream tasks that require common sense to understand or generate text.

To augment PTLMs with concept-centric commonsense knowledge, this paper makes two contributions:

1. We propose both generative and contrastive objectives for learning common sense from text, and use them as intermediate self-supervised learning tasks for incrementally pre-training PTLMs (before task-specific fine-tuning on downstream datasets).

2. We develop a joint pre-training framework that unifies the generative and contrastive objectives so that they can mutually reinforce each other.

Extensive experimental results show that our method, concept-aware language model (CALM), can pack more commonsense knowledge into the parameters of a pre-trained text-to-text transformer without relying on external knowledge graphs, yielding better performance on both NLU and NLG tasks.

Our new self-supervised objectives

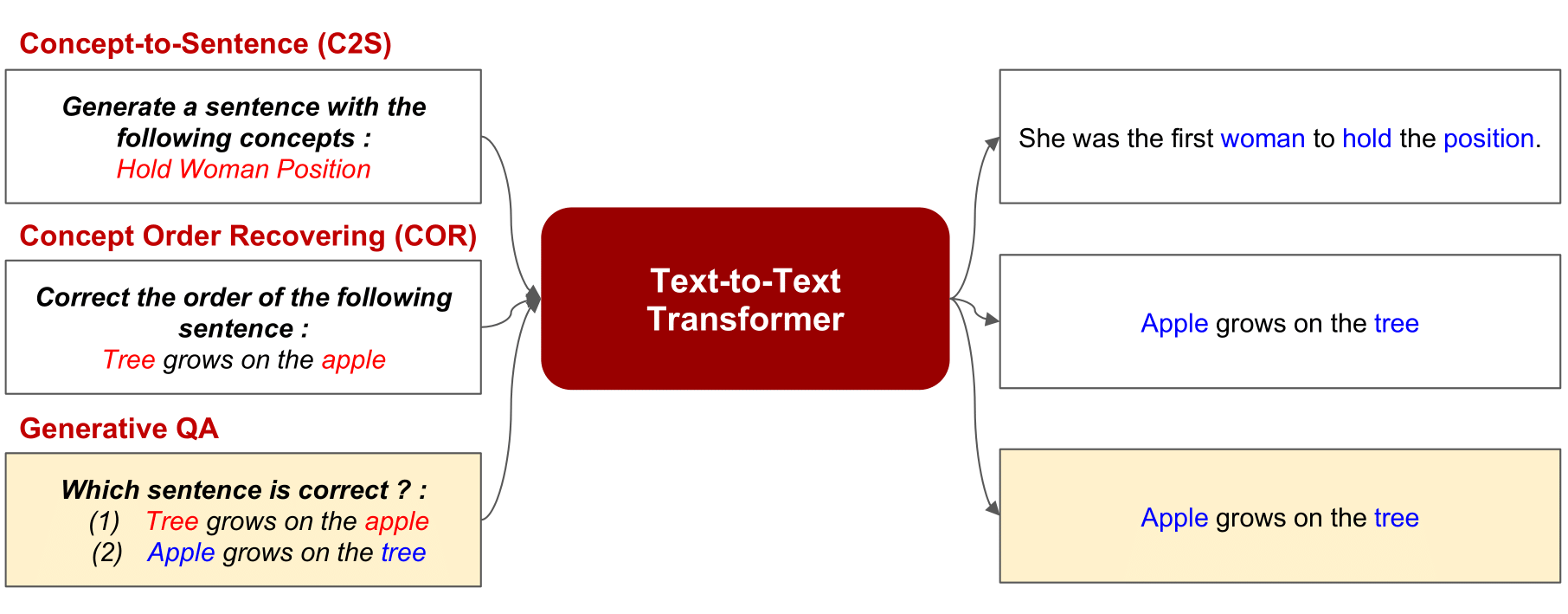

We introduce generative and contrastive self-supervised objectives for improving the commonsense reasoning ability of pre-trained text-to-text transformers: Concept-to-Sentence, Concept Order Recovering, and Generative Question Answering.

Concept-to-Sentence (C2S): Ask the model to recover the original sentence given only a few unordered keywords from the sentence.

Concept Order Recovering (COR): Ask the model to recover the original sentence given a version of the sentence in which the order of the concepts has been shuffled.

Generative Question Answering: Ask the model to distinguish the real sentence from a concept-distracted sentence.
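As a rough illustration of how the source/target pairs for C2S and COR could be built, here is a minimal Python sketch. The function names, the whitespace tokenization, and the hand-picked concept set are assumptions made for this example and are not taken from the released code.

```python
import random


def make_c2s_example(sentence, concepts, rng):
    """Concept-to-Sentence: the source is the unordered concept set,
    the target is the original sentence."""
    keywords = sorted(concepts)  # fixed starting order, then shuffle
    rng.shuffle(keywords)
    return " ".join(keywords), sentence


def make_cor_example(sentence, concepts, rng):
    """Concept Order Recovering: permute the concepts among their own
    positions and leave every other token where it was."""
    tokens = sentence.split()  # naive whitespace tokenization (assumption)
    slots = [i for i, tok in enumerate(tokens) if tok in concepts]
    shuffled = [tokens[i] for i in slots]
    rng.shuffle(shuffled)
    corrupted = list(tokens)
    for i, tok in zip(slots, shuffled):
        corrupted[i] = tok
    return " ".join(corrupted), sentence


rng = random.Random(0)
sentence = "She was the first woman to hold the position"
concepts = {"woman", "hold", "position"}  # hand-picked for illustration

c2s_source, c2s_target = make_c2s_example(sentence, concepts, rng)
cor_source, cor_target = make_cor_example(sentence, concepts, rng)
print("C2S:", c2s_source, "->", c2s_target)
print("COR:", cor_source, "->", cor_target)
```

In both cases the target is the untouched sentence; only the source differs, which is what lets a single text-to-text model be trained on both tasks.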

CALM

1. Given an input sentence x ("She was the first woman to hold the position"), extract the concept set C.

2. Given x and C, produce a corrupted source sentence x' for either C2S or COR.

3. The generator, trained with C2S and COR, tries to recover the original sentence from x'; its output x'' serves as a distractor.

4. The discriminator is trained to distinguish the true sentence x from the distractor x''.
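Steps 3 and 4 can be sketched as follows. This is only an illustration of the data flow: the generator is replaced by a hypothetical stub, and the prompt wording used for the discriminator input is an assumption, not necessarily the format used in the paper.

```python
import random


def build_discriminator_example(truth, distractor, rng):
    """Pair the true sentence with the distractor in random order.
    The target is the true sentence, so the task stays text-to-text."""
    options = [truth, distractor]
    rng.shuffle(options)  # avoid a positional shortcut
    # Prompt wording is an assumption made for this sketch.
    source = "Which sentence is correct? options: 1. {} 2. {}".format(*options)
    return source, truth


def stub_generator(corrupted):
    """Hypothetical stand-in for the generator trained with C2S and COR.
    A real generator would attempt to reconstruct the sentence; this stub
    returns its input unchanged, mimicking an imperfect recovery."""
    return corrupted


rng = random.Random(0)
x = "She was the first woman to hold the position"
x_prime = "She was the first position to hold the woman"  # COR-style corruption
x_double_prime = stub_generator(x_prime)                  # distractor x''

source, target = build_discriminator_example(x, x_double_prime, rng)
print(source)
print("->", target)
```

Shuffling the two options is a design choice of this sketch: it keeps the discriminator from learning that the true sentence always appears in the same slot.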

To cite us

@inproceedings{zhou2020pre,
  title={Pre-training text-to-text transformers for concept-centric common sense},
  author={Zhou, Wangchunshu and Lee, Dong-Ho and Selvam, Ravi Kiran and Lee, Seyeon and Lin, Bill Yuchen and Ren, Xiang},
  booktitle={International Conference on Learning Representations},
  year={2021}
}