CommonGen

A Constrained Text Generation Challengefor Generative Commonsense Reasoning

Bill Yuchen Lin, Wangchunshu Zhou, Ming Shen, Pei Zhou, Chandra Bhagavatula, Yejin Choi, Xiang Ren

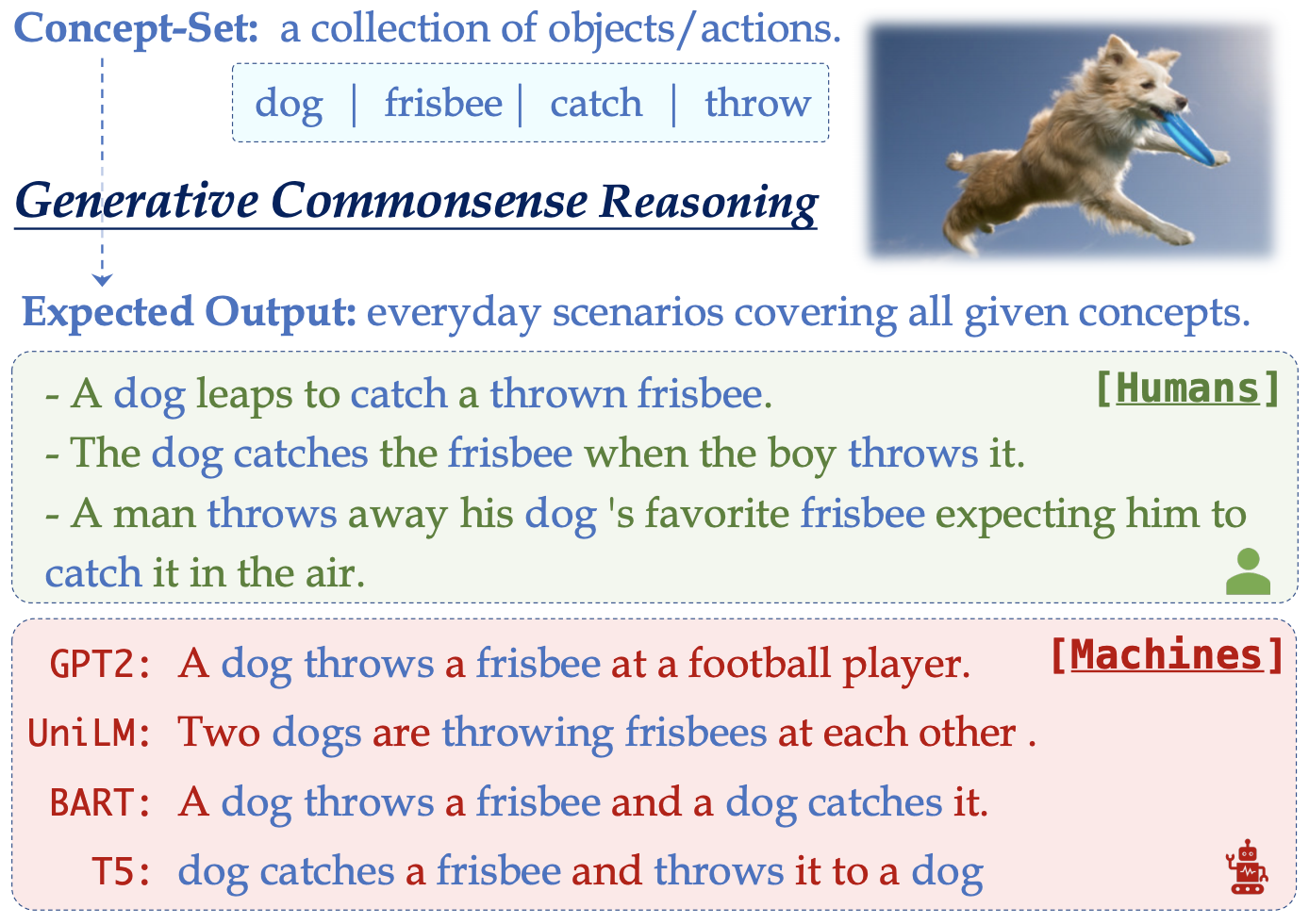

Building machines with commonsense to compose realistically plausible sentences is challenging.

CommonGen is a constrained text generation task, associated with a benchmark dataset, to explicitly test machines for the ability of generative commonsense reasoning.

Given a set of common concepts; the task is to generate a coherent sentence describing an everyday sce- nario using these concepts.

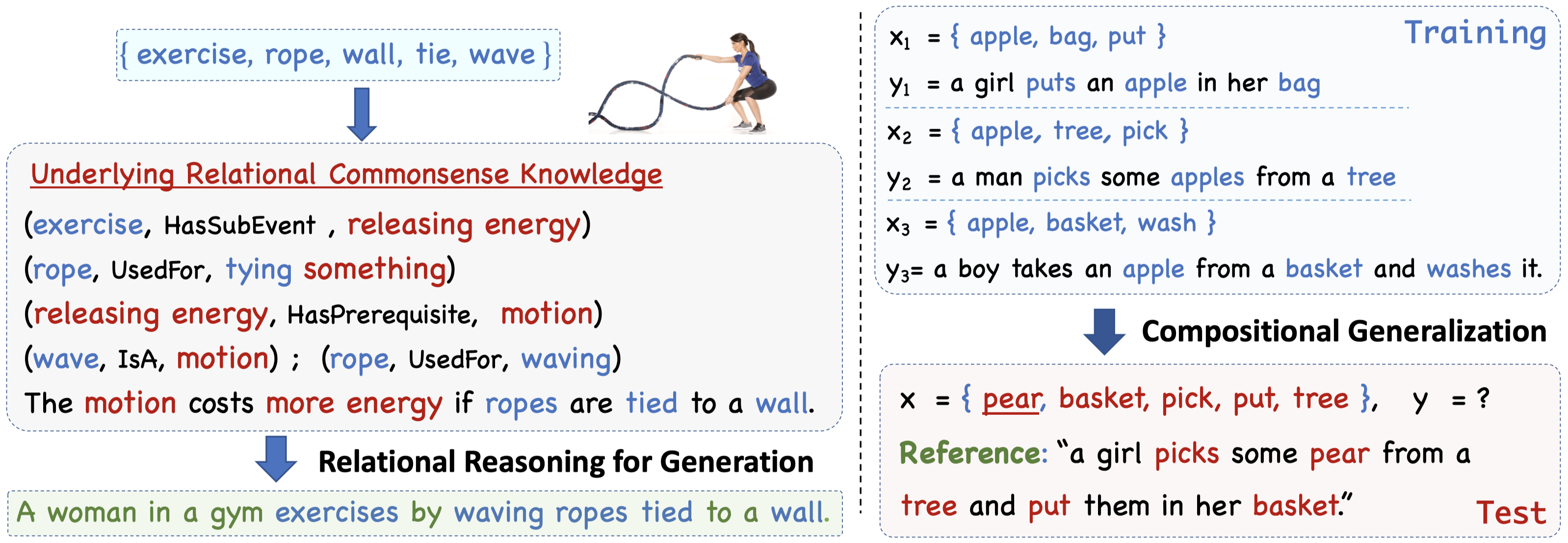

CommonGen is challenging because it inherently requires 1) relational reasoning using background commonsense knowledge, and 2) compositional generalization ability to work on unseen concept combinations. Our dataset, constructed through a combination of crowd-sourcing from AMT and existing caption corpora, consists of 30k concept-sets and 50k sentences in total.

Links:

[Paper]

[Leaderboard]

[CommonGen-Lite]

[Github]

[INK Lab]

Media coverage of CommonGen:

The Register

,

Tech Xplore

,

Techzine

,

Radio.com

,

ScienceDaily

,

USC Viterbi !

@inproceedings{lin-etal-2020-commongen,

title = "{C}ommon{G}en: A Constrained Text Generation Challenge for Generative Commonsense Reasoning",

author = "Lin, Bill Yuchen and

Zhou, Wangchunshu and

Shen, Ming and

Zhou, Pei and

Bhagavatula, Chandra and

Choi, Yejin and

Ren, Xiang",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2020",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.findings-emnlp.165",

pages = "1823--1840",

}