Recurrent Event Network: Autoregressive Structure Inference over Temporal Knowledge Graphs

Woojeong Jin, Meng Qu, Xisen Jin, Xiang Ren. EMNLP 2020

Abstract

Knowledge graph reasoning is a critical task in natural language processing. The task becomes more challenging on temporal knowledge graphs (TKGs), where each fact is associated with a timestamp. Most existing methods focus on reasoning at past timestamps and cannot predict facts that will happen in the future. This paper proposes Recurrent Event Network (RE-Net), a novel autoregressive architecture for predicting future interactions. The occurrence of a fact (event) is modeled as a probability distribution conditioned on temporal sequences of past knowledge graphs. Specifically, RE-Net employs a recurrent event encoder to encode past facts and uses a neighborhood aggregator to model the connection of facts at the same timestamp. Future facts can then be inferred sequentially based on these two modules. We evaluate our proposed method via link prediction at future times on five public datasets. Through extensive experiments, we demonstrate the strength of RE-Net, especially on multi-step inference over future timestamps, and achieve state-of-the-art performance on all five datasets.
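The neighborhood aggregator summarizes the facts around an entity at a single timestamp. The sketch below is minimal and rests on two assumptions. First, it uses plain mean pooling over the objects that a subject links to under a given relation, which is only one possible aggregator choice. Second, the function `mean_neighbor_aggregate` and the toy embeddings are hypothetical and are not taken from the RE-Net code. Under those assumptions, the per-timestamp summary could be computed like this:

```python
from typing import Dict, List, Sequence, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object) observed at one timestamp


def mean_neighbor_aggregate(
    snapshot: Sequence[Triple],
    subject: str,
    relation: str,
    entity_emb: Dict[str, List[float]],
    dim: int,
) -> List[float]:
    """Mean-pool embeddings of objects linked to `subject` via `relation` in one snapshot.

    Hypothetical helper (mean pooling is an assumed aggregator choice).
    Returns a zero vector if the subject has no such neighbors at this timestamp.
    """
    neighbors = [o for s, r, o in snapshot if s == subject and r == relation]
    if not neighbors:
        return [0.0] * dim
    summed = [0.0] * dim
    for o in neighbors:
        for i, value in enumerate(entity_emb[o]):
            summed[i] += value
    return [value / len(neighbors) for value in summed]


# Toy 2-d embeddings, made up for illustration.
emb = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
snap = [("X", "meets", "A"), ("X", "meets", "B"), ("X", "sues", "C")]
print(mean_neighbor_aggregate(snap, "X", "meets", emb, dim=2))  # [0.5, 0.5]
```

In a design like RE-Net's, summaries of this kind would then be fed in time order to the recurrent event encoder. This sketch stops before that step and has no learned parameters.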

Links: [Paper] [GitHub]

Method

We represent a TKG as a sequence of graph snapshots, one per timestamp, and build an autoregressive generative model over this sequence. To this end, RE-Net defines the joint probability of the concurrent events at a timestamp (i.e., a graph snapshot), conditioned on all previous events.
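The autoregressive view can be made concrete with a short sketch. It first groups timestamped facts into time-ordered snapshots. It then scores the whole sequence as a sum of per-event log-probabilities, where each event is conditioned only on earlier snapshots. The sketch assumes that events at the same timestamp are conditionally independent given the history. The callable `event_log_prob` is a hypothetical stand-in for a learned model, and the function names are illustrative rather than RE-Net's API.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Quad = Tuple[str, str, str, int]  # (subject, relation, object, timestamp)
Triple = Tuple[str, str, str]


def to_snapshots(quads: Iterable[Quad]) -> List[Tuple[int, List[Triple]]]:
    """Group timestamped facts into time-ordered graph snapshots G_1, ..., G_T."""
    by_time: Dict[int, List[Triple]] = defaultdict(list)
    for s, r, o, t in quads:
        by_time[t].append((s, r, o))
    return [(t, by_time[t]) for t in sorted(by_time)]


def sequence_log_likelihood(
    snapshots: List[Tuple[int, List[Triple]]],
    event_log_prob: Callable[[Triple, List[List[Triple]]], float],
) -> float:
    """log p(G_1..G_T) = sum_t sum_{e in G_t} log p(e | G_<t).

    Assumes concurrent events are conditionally independent given the history;
    `event_log_prob` is a hypothetical model supplied by the caller.
    """
    total = 0.0
    history: List[List[Triple]] = []
    for _, graph in snapshots:
        for event in graph:
            # Condition only on snapshots strictly before the current timestamp.
            total += event_log_prob(event, history)
        history.append(graph)
    return total


def uniform_log_prob(event: Triple, history: List[List[Triple]]) -> float:
    """Toy scorer: every event has probability 1/4, regardless of history."""
    return math.log(0.25)


quads = [("A", "sues", "C", 1), ("A", "meets", "B", 2), ("B", "meets", "C", 2)]
snaps = to_snapshots(quads)
print(sequence_log_likelihood(snaps, uniform_log_prob))  # equals 3 * math.log(0.25)
```

At inference time, the same factorization can be run forward. The model predicts a snapshot for the next timestamp, appends it to the history, and repeats. This corresponds to the multi-step setting described in the abstract.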

Experiments

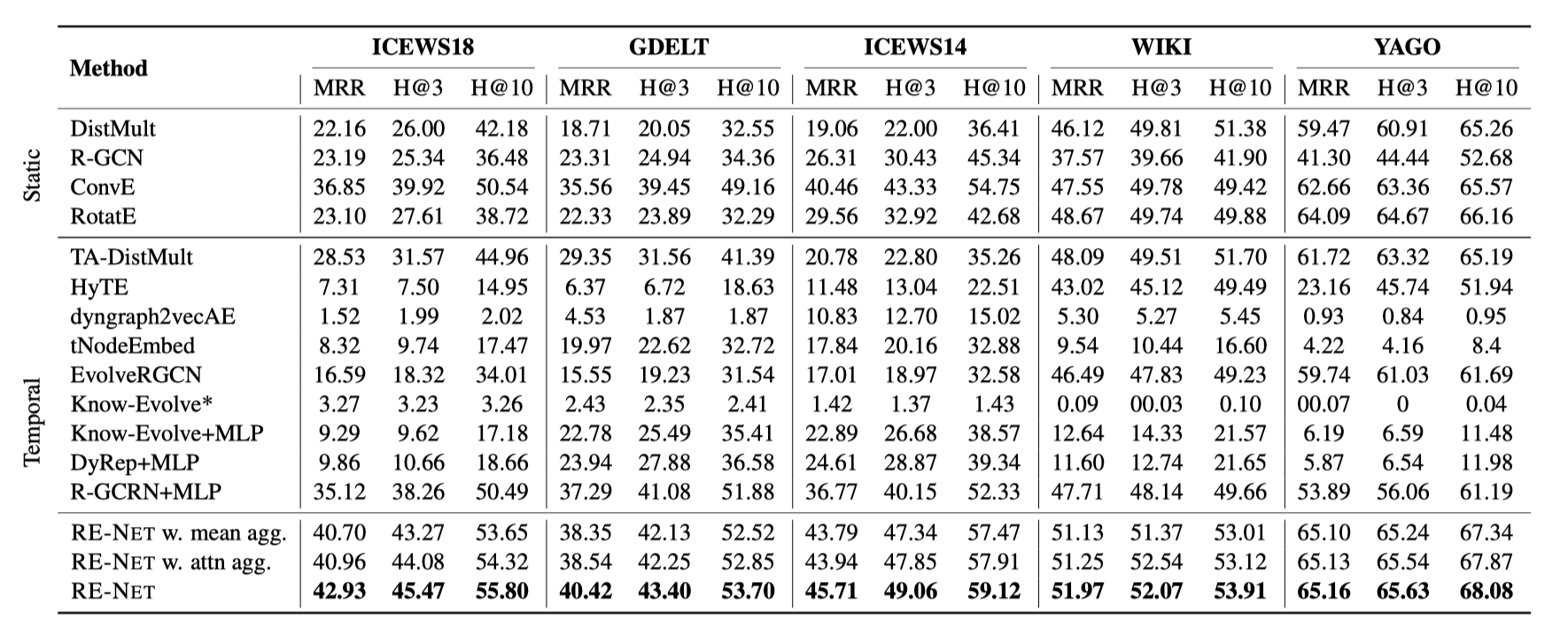

Baselines. We compare our approach to baselines for static graphs and temporal graphs as follows:

(1) Static Methods. By ignoring edge timestamps, we construct a static, cumulative graph from all training events and apply multi-relational graph representation learning methods, including DistMult (Yang et al., 2015), R-GCN (Schlichtkrull et al., 2018), ConvE (Dettmers et al., 2018), and RotatE (Sun et al., 2019).
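The static baselines need two pieces: the cumulative graph and a static scoring function. The sketch below shows both. It drops timestamps and deduplicates the training facts, then scores a triple with the standard DistMult trilinear product. The helper names and toy vectors are illustrative assumptions, not code from the paper.

```python
from typing import Iterable, List, Set, Tuple

Quad = Tuple[str, str, str, int]  # (subject, relation, object, timestamp)


def cumulative_static_graph(quads: Iterable[Quad]) -> Set[Tuple[str, str, str]]:
    """Drop timestamps; a fact observed at several times collapses to one edge."""
    return {(s, r, o) for s, r, o, _t in quads}


def distmult_score(e_s: List[float], w_r: List[float], e_o: List[float]) -> float:
    """DistMult score: sum_i e_s[i] * w_r[i] * e_o[i] (a diagonal bilinear form)."""
    return sum(a * b * c for a, b, c in zip(e_s, w_r, e_o))


train = [("A", "meets", "B", 1), ("A", "meets", "B", 2), ("B", "sues", "C", 2)]
print(sorted(cumulative_static_graph(train)))  # [('A', 'meets', 'B'), ('B', 'sues', 'C')]
print(distmult_score([1.0, 2.0], [3.0, 4.0], [5.0, 6.0]))  # 63.0
```

The collapse step discards both when a fact happened and how often it recurred. In addition, the DistMult score is symmetric in subject and object, so this baseline cannot tell (s, r, o) apart from (o, r, s).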

(2) Temporal Reasoning Methods. We compare against Know-Evolve (Trivedi et al., 2017), TADistMult (García-Durán et al., 2018), and HyTE (Dasgupta et al., 2018). We also evaluate Know-Evolve, DyRep (Trivedi et al., 2019), and GCRN (Seo et al., 2017), each combined with our MLP decoder.

Results. We report filtered Mean Reciprocal Rank (MRR) and Hits@3/10. RE-Net outperforms all other baselines on ICEWS18, ICEWS14, and GDELT.
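The filtered metrics can be sketched as follows, under stated assumptions. For each query (s, r, ?), other objects already known to be correct are removed from the ranking before the gold object is ranked. MRR then averages 1/rank, and Hits@k is the fraction of ranks that are at most k. The source does not say which facts form the filter set, for example all known facts or only those at the query timestamp, so `known_true` is left to the caller. Tie handling is also an assumption: here only strictly higher-scoring candidates push the target down.

```python
from typing import Dict, Sequence, Set, Tuple


def filtered_rank(scores: Dict[str, float], target: str, known_true: Set[str]) -> int:
    """1-based rank of `target`, ignoring other candidates known to be correct.

    Assumed tie rule: only strictly higher scores count, so ties favor the target.
    """
    target_score = scores[target]
    better = sum(
        1
        for cand, score in scores.items()
        if cand != target and cand not in known_true and score > target_score
    )
    return 1 + better


def mrr_and_hits(
    ranks: Sequence[int], ks: Tuple[int, ...] = (3, 10)
) -> Tuple[float, Dict[int, float]]:
    """Mean Reciprocal Rank and Hits@k over per-query ranks."""
    n = len(ranks)
    mrr = sum(1.0 / r for r in ranks) / n
    hits = {k: sum(1 for r in ranks if r <= k) / n for k in ks}
    return mrr, hits


# Toy query (s, r, ?) whose gold object is "C"; "A" is another correct object.
scores = {"A": 0.9, "B": 0.8, "C": 0.5, "D": 0.1}
print(filtered_rank(scores, "C", known_true={"A", "C"}))  # 2 (raw rank would be 3)
print(mrr_and_hits([1, 2, 4]))
```

Filtering keeps a model from being penalized for ranking a different but valid answer above the gold object. As a result, filtered scores are never lower than the corresponding raw scores.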

Citation

@inproceedings{jin2020Renet,
  title={Recurrent Event Network: Autoregressive Structure Inference over Temporal Knowledge Graphs},
  author={Jin, Woojeong and Qu, Meng and Jin, Xisen and Ren, Xiang},
  booktitle={EMNLP},
  year={2020}
}