Introduction

We study hierarchical explanations of neural sequence model predictions to help people understand how these models handle compositional semantics such as stress or negation. We identify the key challenge as computing non-additive and context-independent importance for individual words and phrases. Following this formulation, we propose two explanation algorithms: Sampling and Contextual Decomposition (SCD) and Sampling and OCclusion (SOC). We evaluate them on both LSTM and BERT Transformer models for sentiment analysis and relation extraction tasks.

Method

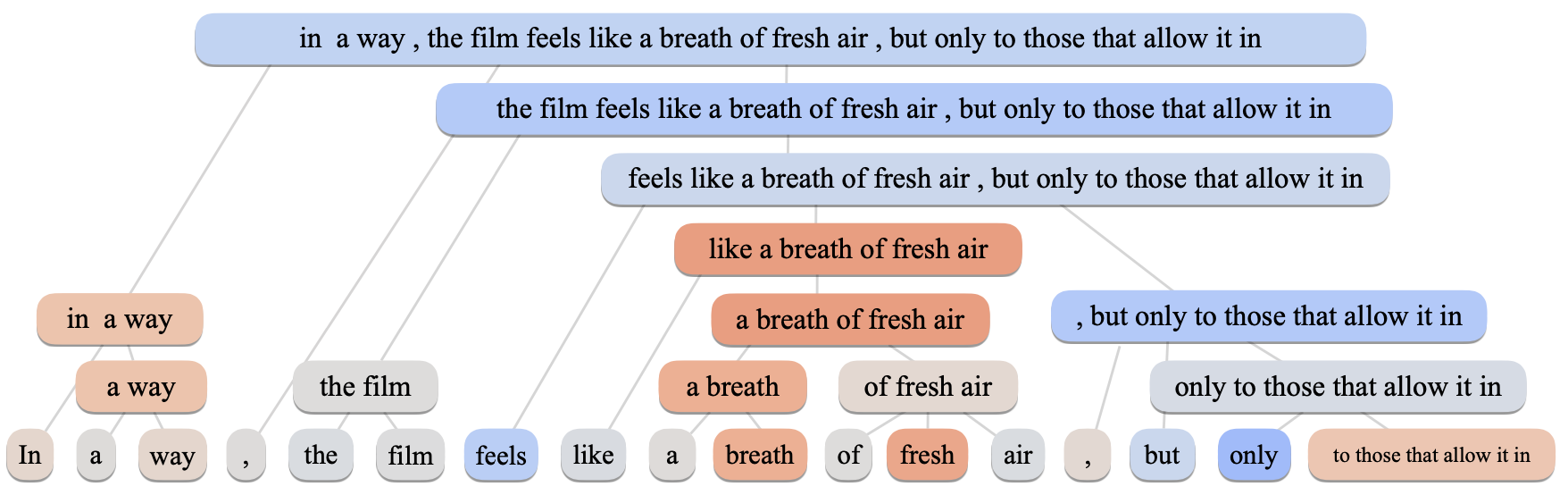

The core of our method is the context-independent importance of a phrase, defined as the expected change in the model's activation value when the phrase is masked out, taken over different contexts. We approximate this expectation by sampling the context words around the target phrase with a pretrained language model. Then, depending on how the masking operation is implemented, we perform either layer-wise decomposition (SCD) or occlusion (SOC) to obtain the importance of the phrase. Meanwhile, we generate a hierarchical layout of the explanation with agglomerative clustering, following Singh et al. 2019.
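To make the SOC estimate concrete, here is a minimal Python sketch under several assumptions. The toy model `toy_model`, the mask token `PAD`, the neighborhood `radius`, and the helpers `make_uniform_sampler` and `soc_importance` are all hypothetical. In particular, the sampler draws replacement context words uniformly from a small vocabulary as a stand-in for the pretrained language model described above, and the sketch covers only the occlusion variant (SOC), not the layer-wise decomposition used by SCD.

```python
import random
from typing import Callable, List, Sequence, Tuple

PAD = "<pad>"  # hypothetical token used to occlude the phrase

# Toy polarity lexicon (hypothetical); "not" flips the polarity of the next word,
# so the model is deliberately non-additive.
LEXICON = {"good": 1.0, "bad": -1.0}


def toy_model(tokens: Sequence[str]) -> float:
    """Hypothetical sentiment score standing in for an LSTM/BERT output logit."""
    score = 0.0
    for i, word in enumerate(tokens):
        s = LEXICON.get(word, 0.0)
        if i > 0 and tokens[i - 1] == "not":
            s = -s
        score += s
    return score


def make_uniform_sampler(vocab: Sequence[str], radius: int = 2, seed: int = 0):
    """Resample the words within `radius` positions of the phrase.

    Uniform sampling is a simplification; the method described above uses a
    pretrained language model to sample these context words.
    """
    rng = random.Random(seed)

    def sample(tokens: List[str], start: int, end: int) -> List[str]:
        out = list(tokens)
        for i in range(max(0, start - radius), min(len(tokens), end + radius)):
            if not start <= i < end:  # keep the phrase itself fixed
                out[i] = rng.choice(vocab)
        return out

    return sample


def soc_importance(
    tokens: Sequence[str],
    span: Tuple[int, int],
    model: Callable[[Sequence[str]], float],
    sample_context: Callable[[List[str], int, int], List[str]],
    num_samples: int = 20,
) -> float:
    """Monte Carlo estimate of the importance of tokens[start:end].

    Averages, over sampled contexts, the model score with the phrase present
    minus the score with the phrase occluded by PAD.
    """
    start, end = span
    total = 0.0
    for _ in range(num_samples):
        ctx = sample_context(list(tokens), start, end)
        occluded = ctx[:start] + [PAD] * (end - start) + ctx[end:]
        total += model(ctx) - model(occluded)
    return total / num_samples


sampler = make_uniform_sampler(["the", "a", "film", "plot"])  # neutral filler words
sentence = ["the", "movie", "is", "not", "good"]
print(soc_importance(sentence, (3, 5), toy_model, sampler))  # "not good" -> -1.0
print(soc_importance(sentence, (4, 5), toy_model, sampler))  # "good" -> 1.0
print(soc_importance(sentence, (3, 4), toy_model, sampler))  # "not" -> 0.0
```

In this toy setting "good" keeps a positive score even though it sits inside a negated sentence, because its neighbor "not" is resampled away, and the score of "not good" is not the sum of the scores of "not" and "good". These are the context-independent and non-additive properties the method targets.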

Experiments

Hierarchical visualizations of model predictions. The visualizations explain how a prediction builds up from individual words to the full sentence. Quantitative evaluation shows that our explanations achieve high correlations with selected reference explanations.

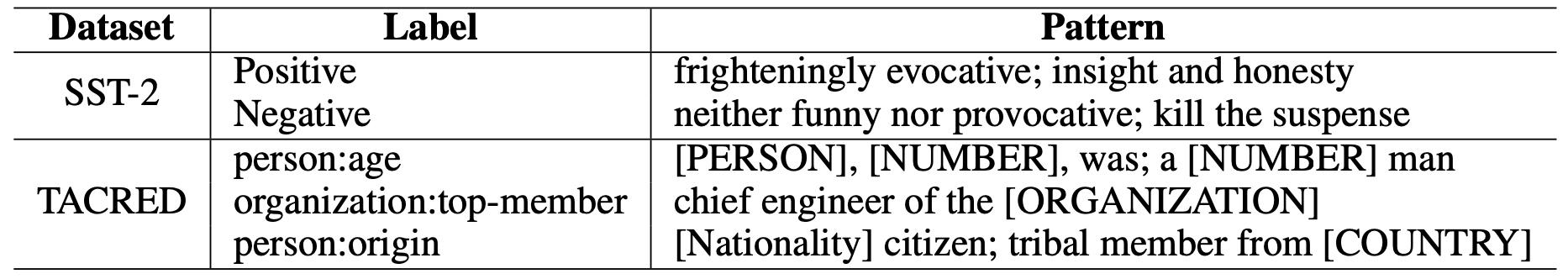
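A hierarchy like this can be read as a sequence of layers in which adjacent spans are merged until one span covers the whole sentence. The sketch below is a simplified greedy variant, not necessarily the exact clustering criterion of Singh et al. 2019: it assumes the merge rule "join the adjacent pair whose union has the largest absolute importance", and the span scores in the hypothetical `TOY_SCORES` table are made-up values standing in for SCD or SOC outputs.

```python
from typing import Callable, Dict, List, Tuple

Span = Tuple[int, int]  # half-open token range [start, end)

# Made-up importance scores for multi-word spans (hypothetical SCD/SOC outputs);
# spans not listed score 0.0.
TOY_SCORES: Dict[Span, float] = {
    (0, 2): 0.1, (1, 3): -1.5, (2, 4): 0.8,
    (0, 3): -1.4, (1, 4): -1.6, (0, 4): -1.3,
}


def toy_score(span: Span) -> float:
    return TOY_SCORES.get(span, 0.0)


def build_hierarchy(n_tokens: int, score: Callable[[Span], float]) -> List[List[Span]]:
    """Greedy bottom-up merging of adjacent spans.

    Starts from one span per word; at each step, merges the adjacent pair whose
    union has the largest absolute score and records the new layer.
    """
    layer: List[Span] = [(i, i + 1) for i in range(n_tokens)]
    layers = [list(layer)]
    while len(layer) > 1:
        best = max(
            range(len(layer) - 1),
            key=lambda k: abs(score((layer[k][0], layer[k + 1][1]))),
        )
        merged = (layer[best][0], layer[best + 1][1])
        layer = layer[:best] + [merged] + layer[best + 2:]
        layers.append(list(layer))
    return layers


words = ["a", "not", "good", "movie"]
for layer in build_hierarchy(len(words), toy_score):
    print(" | ".join(" ".join(words[s:e]) for s, e in layer))
```

With these toy scores the printed layers are "a | not | good | movie", then "a | not good | movie", then "a | not good movie", and finally the full sentence, so the strongly scored phrase "not good" is formed first.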

Classification pattern extraction from trained models. The context-independence property of our importance attribution makes our explanations a natural fit for extracting phrase-level classification patterns from models.

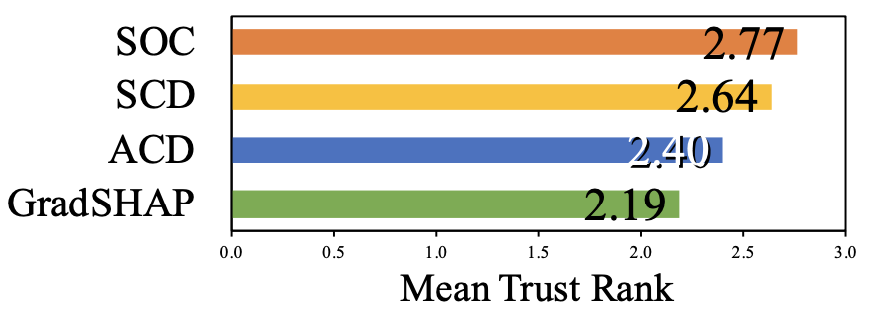
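Because a context-independent score does not depend on the sentence a phrase appears in, scores collected from different sentences can be averaged and ranked into one global list of patterns. The sketch below assumes a hypothetical scorer, `isolated_score`, that rates a phrase with its surrounding context removed using a toy lexicon; in practice SCD or SOC would take its place. The n-gram limit `max_n`, the averaging over occurrences, and `top_k` are illustrative choices, not settings from the paper.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Span = Tuple[int, int]  # half-open token range [start, end)
Pattern = Tuple[str, float]

# Toy polarity lexicon (hypothetical); "not" flips the polarity of the next word.
LEXICON = {"good": 1.0, "great": 2.0, "bad": -1.0}


def isolated_score(sent: Sequence[str], span: Span) -> float:
    """Hypothetical stand-in for SCD/SOC: score the phrase with its context removed."""
    phrase = sent[span[0]:span[1]]
    total = 0.0
    for i, word in enumerate(phrase):
        s = LEXICON.get(word, 0.0)
        if i > 0 and phrase[i - 1] == "not":
            s = -s
        total += s
    return total


def extract_patterns(
    corpus: Sequence[Sequence[str]],
    phrase_score: Callable[[Sequence[str], Span], float],
    max_n: int = 2,
    top_k: int = 3,
) -> Tuple[List[Pattern], List[Pattern]]:
    """Average each n-gram's score over its occurrences and return the
    top_k most positive and top_k most negative patterns."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for sent in corpus:
        for n in range(1, max_n + 1):
            for i in range(len(sent) - n + 1):
                key = " ".join(sent[i:i + n])
                sums[key] += phrase_score(sent, (i, i + n))
                counts[key] += 1
    ranked = sorted(
        ((k, sums[k] / counts[k]) for k in sums), key=lambda kv: kv[1], reverse=True
    )
    positive = [kv for kv in ranked if kv[1] > 0][:top_k]
    negative = [kv for kv in reversed(ranked) if kv[1] < 0][:top_k]
    return positive, negative


corpus = [["not", "good"], ["great", "plot"], ["bad", "acting"]]
pos, neg = extract_patterns(corpus, isolated_score)
print(pos)
print(neg)
```

On this toy corpus "not good" appears among the negative patterns while "good" appears among the positive ones, which is the kind of phrase-level pattern an additive, word-level attribution would miss.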

Improving human trust in models. Human evaluation on both LSTM and BERT models shows that our approaches achieve significantly higher human evaluation scores than baseline explanation methods.

To cite us

@inproceedings{jin2020towards,
  title={Towards Hierarchical Importance Attribution: Explaining Compositional Semantics for Neural Sequence Models},
  author={Xisen Jin and Zhongyu Wei and Junyi Du and Xiangyang Xue and Xiang Ren},
  booktitle={International Conference on Learning Representations},
  year={2020},
  url={https://openreview.net/forum?id=BkxRRkSKwr}
}

Contact

If you have any questions about the paper or the code, please feel free to contact Xisen Jin (xisenjin usc edu).